Program context: GACC PIT Registered Apprenticeships

GACC PIT operates a portfolio of state-registered apprenticeship programs serving advanced manufacturing, industrial automation, and technical occupations across Western Pennsylvania. With 143 active apprentices across seven pathways—from Mechatronics Technicians to Electric Vehicle Automotive Technicians—GACC supports employers operating in complex, high-skill environments.

In registered apprenticeships, the logbook is the system of record for on-the-job training. In advanced manufacturing environments, traditional logbooks—paper, spreadsheets, or loosely structured digital tools—are typically completed late, in bulk, and under deadline pressure. The result is subjective, low-fidelity evidence that satisfies reporting requirements but offers little operational value.

For employers, apprenticeship investment is only valuable if it produces verifiable skill acquisition, productive work, and workforce readiness. As programs scaled across multiple employers and job roles, a central challenge emerged:

How do employers see—clearly and continuously—what apprentices are actually learning and contributing on the job?

GACC took a different approach. Rather than treating the logbook as paperwork, they treated apprenticeship as a production system—one that can be instrumented, validated, and governed in real time.

The objective was clear: make compliance a natural outcome of daily operations, not a quarterly scramble.

What GACC built: a closed-loop observability system

GACC's observability model is not a dashboard layered on top of reporting. It is a closed-loop system that captures work as it happens, validates it continuously, and surfaces risk early.

VELA, the platform behind this model, turns on-the-job training from a black box into a continuously observable system of work and skill development.

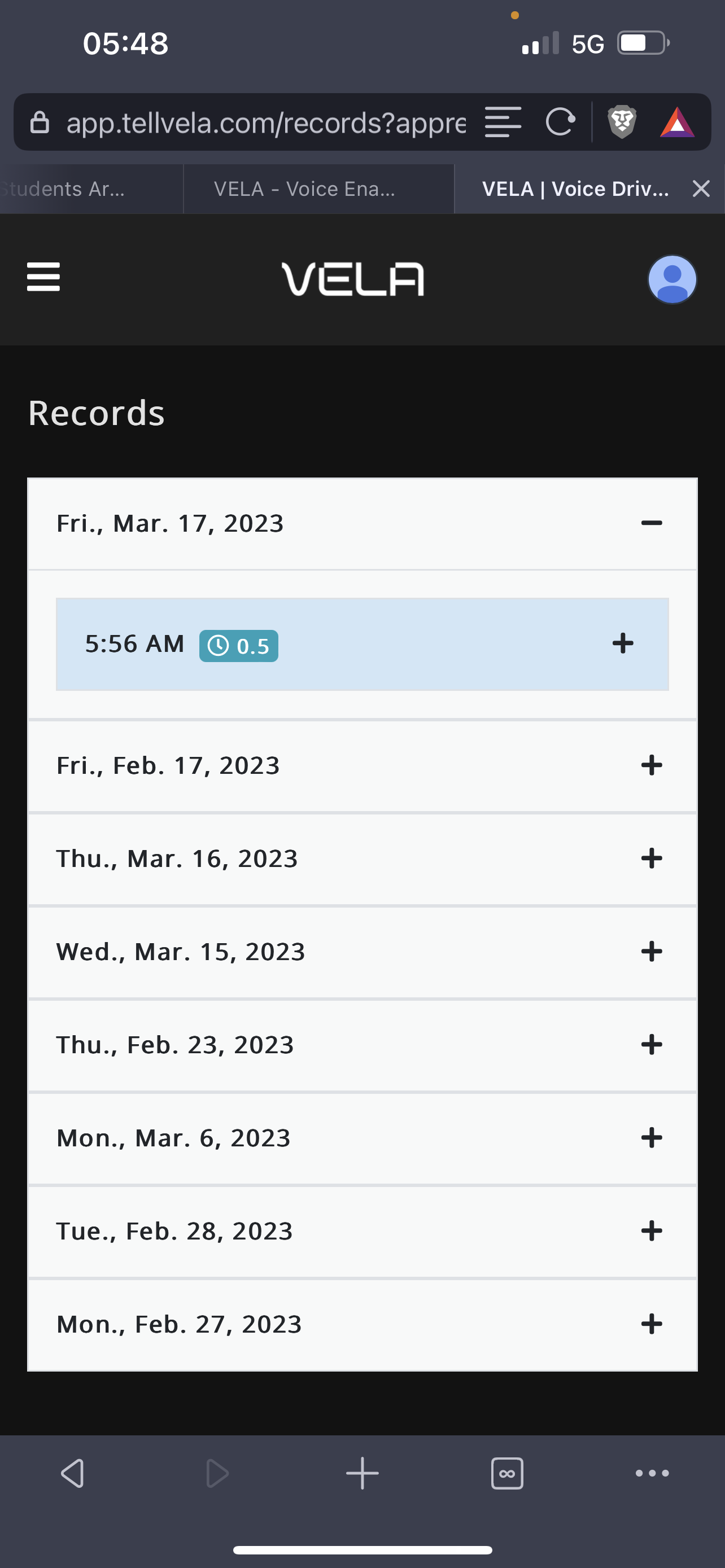

1. Edge capture: work → evidence

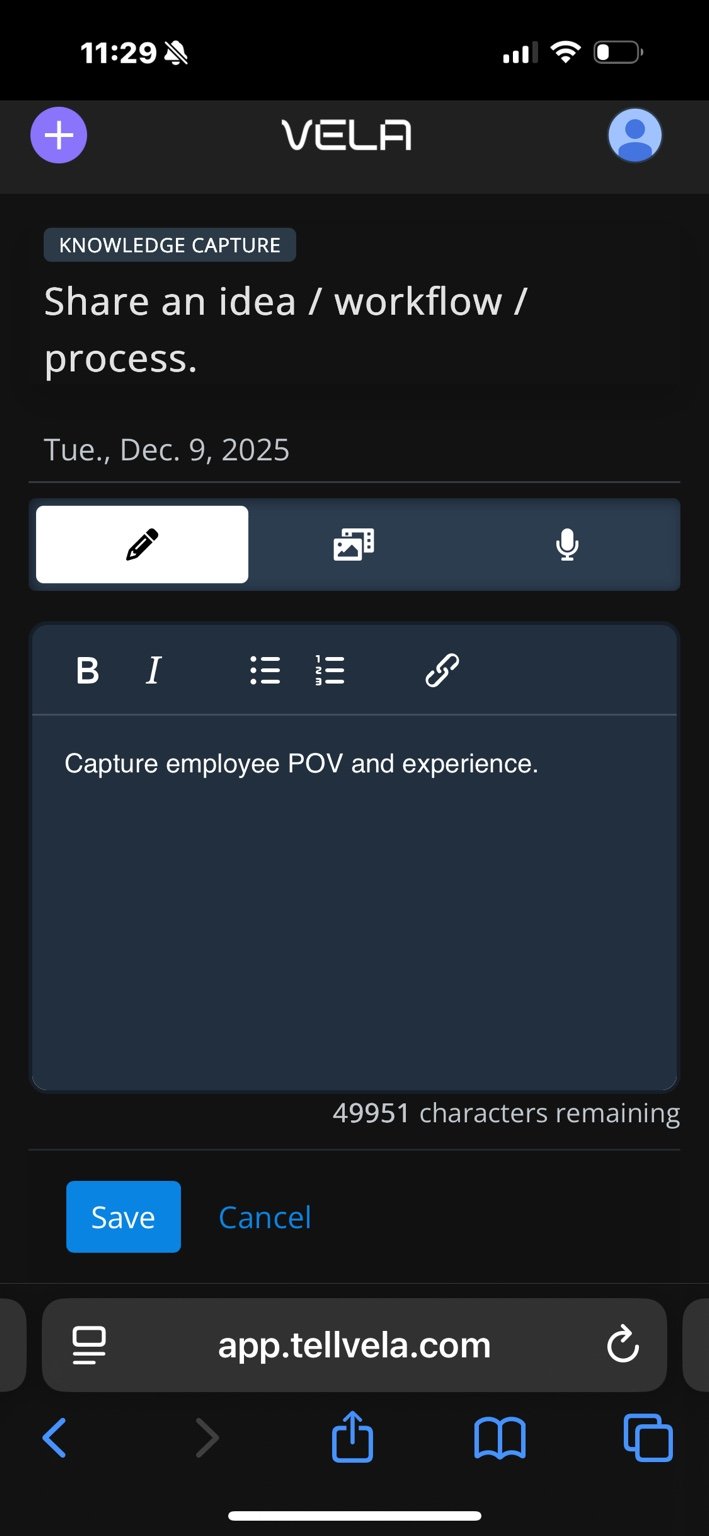

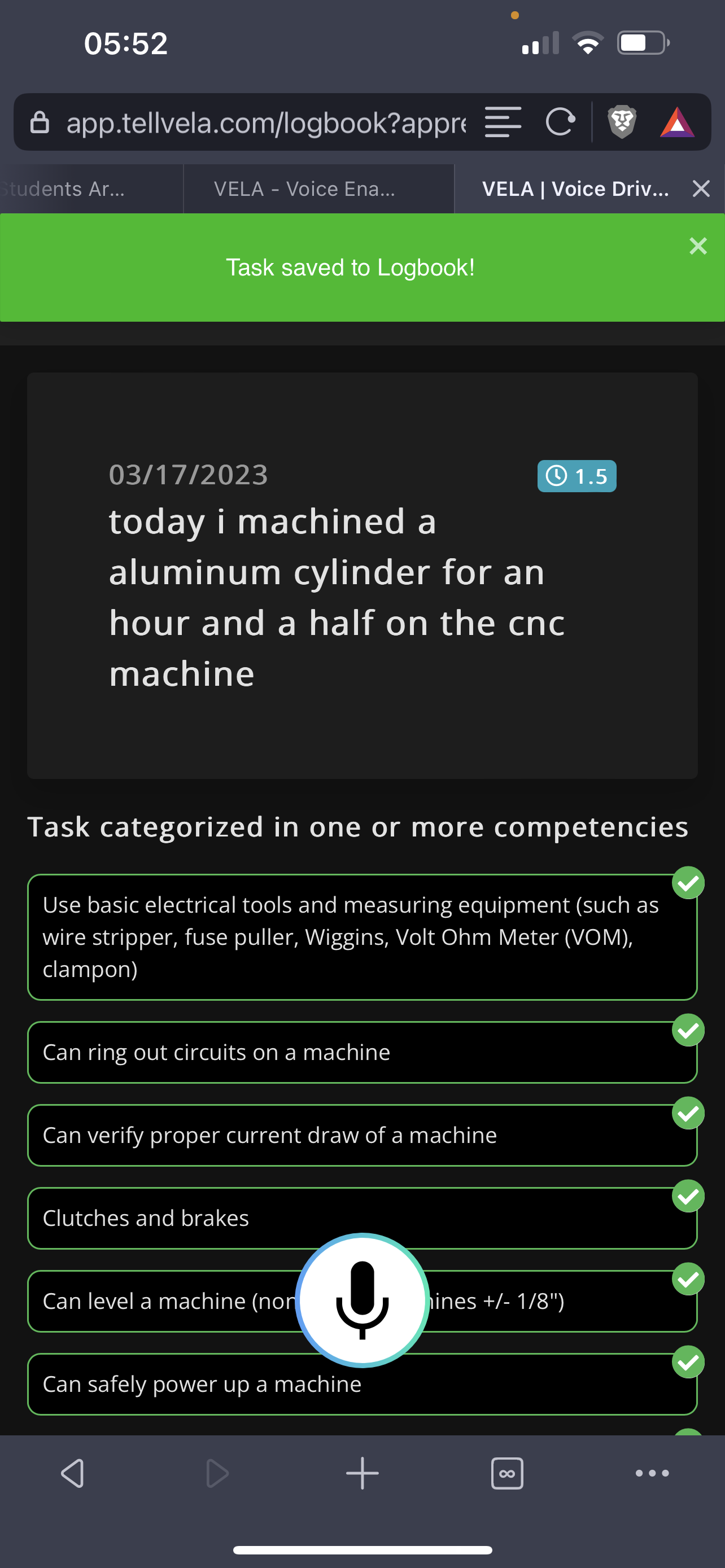

Apprentices log OJT at the point of work using VELA's shop-floor–friendly workflows, including hands-free and voice-enabled capture. Evidence is created in context, not reconstructed weeks later.

- Voice-enabled capture: Apprentices document real work tasks without interrupting productivity

- Immediate evidence creation: Each entry becomes a verified record of work performed and skills demonstrated

- Competency-aligned signals: Work activity is directly tied to job-relevant competencies

This shifts logging from a compliance task to a natural extension of work. For employers, training time is no longer abstract—it is visible, attributable, and defensible.

2. Structured evidence: evidence → signals

Every logbook entry is normalized into structured signals:

- 100% transcript coverage ensures each record contains narrative evidence of work performed

- 99.9% competency tagging links work directly to defined skills, enabling traceability from task execution to competency demonstration

This structure is foundational: it makes apprenticeship data queryable, comparable, and auditable at scale.

3. Transcript Quality Intelligence: coverage → evidence strength

Coverage alone isn't enough. Unlike traditional digital logbooks that merely record hours, VELA ensures what gets logged is useful, auditable, and defensible.

VELA analyzes transcript quality at scale through its Transcript Quality Index (TQI), distinguishing between:

- Minimal activity notes — brief, low-detail entries

- Clear task descriptions — actionable documentation of work performed

- Audit-grade narratives — entries with actions, measurements, and outcomes

The TQI evaluates each entry for evidence strength by detecting:

- Action verbs — what was done

- Measurements and units — how much, how long, to what tolerance

- Outcomes — what changed, what improved, what was fixed

- Role-specific technical language — terms that reflect actual job functions (PLC troubleshooting, injection molding, mold changeover)

This transforms subjective "good notes" into objective, coachable signals—creating a defensible trail from work performed → competency demonstrated → supervisor verified.
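To make the idea concrete, here is a minimal sketch of how signal detection of this kind could work. It is an illustration under stated assumptions, not VELA's actual scoring model: the word lists, the function names `detect_signals` and `tier`, and the tier cutoffs are all hypothetical.

```python
import re

# Hypothetical vocabularies for illustration only; not VELA's actual lexicon.
ACTION_VERBS = {"installed", "replaced", "tested", "adjusted", "calibrated"}
OUTCOME_WORDS = {"fixed", "verified", "resolved"}
UNITS = {"psi", "volts", "rpm", "mm", "amps"}


def detect_signals(transcript: str) -> dict:
    """Report which evidence signals appear in a transcript."""
    words = set(re.findall(r"[a-z]+", transcript.lower()))
    return {
        "action": bool(words & ACTION_VERBS),
        "measurement": bool(re.search(r"\d", transcript)),  # any number at all
        "unit": bool(words & UNITS),
        "outcome": bool(words & OUTCOME_WORDS),
    }


def tier(transcript: str) -> str:
    """Map detected signals to the three tiers above (assumed cutoffs)."""
    s = detect_signals(transcript)
    if s["action"] and s["outcome"] and (s["measurement"] or s["unit"]):
        return "audit-grade narrative"
    if s["action"]:
        return "clear task description"
    return "minimal activity note"
```

Under these assumptions, an entry such as "Replaced pressure regulator, tested at 90 psi, verified leak resolved" would land in the audit-grade tier, while "worked in maintenance" would be classed as a minimal activity note. A production model would presumably weight signals rather than apply hard rules.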

The underlying transcript data (GACC-only, excluding MCT) illustrates the gap. A DuckDB analysis of 16,850 transcripts (generated 2025-12-17) shows that while coverage is complete, brevity is common: the median entry is 9 words (51 characters), and 16.64% of entries are very short (≤20 characters).

That’s exactly why TQI matters: it distinguishes participation from evidence strength and turns “short notes” into a measurable coaching signal.
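Brevity statistics like these are simple to reproduce. The sketch below is written in plain Python rather than the DuckDB query used for the analysis (which is not shown in this document), and the function name `brevity_stats` is hypothetical. Only the 20-character cutoff comes from the figures above.

```python
from statistics import median


def brevity_stats(transcripts, short_chars=20):
    """Median length and share of very short entries for a list of transcripts."""
    word_counts = [len(t.split()) for t in transcripts]
    char_counts = [len(t) for t in transcripts]
    very_short = sum(1 for c in char_counts if c <= short_chars)
    return {
        "median_words": median(word_counts),
        "median_chars": median(char_counts),
        "pct_very_short": round(100 * very_short / len(transcripts), 2),
    }


# Made-up sample entries, not drawn from the GACC dataset.
sample = [
    "10 hrs",
    "worked in maintenance",
    "Replaced heater band and verified zone temperature",
]
stats = brevity_stats(sample)
```

Run against a full transcript export, the same three numbers give program staff a quick baseline before any quality scoring is applied.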

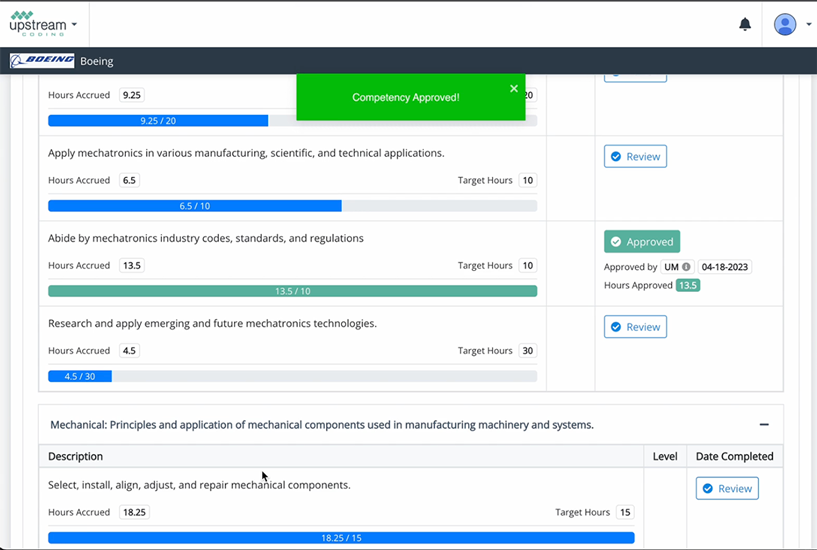

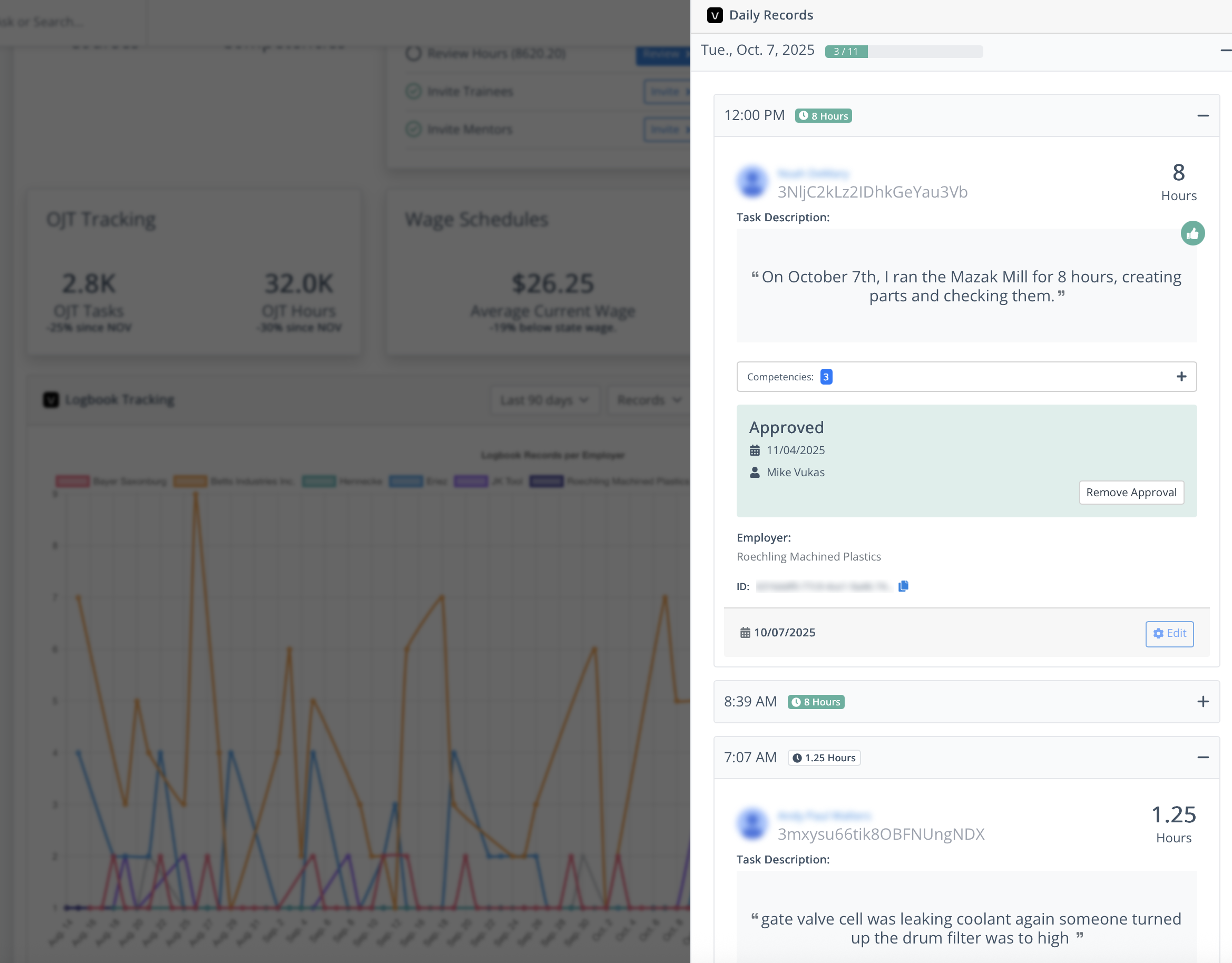

4. Continuous verification: signals → validation

Supervisors review and approve records directly in the system, creating a permanent validation trail. Approval behavior becomes visible and measurable, rather than hidden until reporting deadlines.

Across the portfolio, GACC maintains an 88.75% supervisor approval rate, turning verification into an operational signal rather than an administrative afterthought.

Approval confirms participation. Quality intelligence confirms evidence strength.

VELA separates verification from narrative richness—allowing programs to maintain high approval rates while continuously improving documentation quality. This dual-signal approach means supervisors aren't burdened with coaching documentation style during every approval cycle, while program staff gain visibility into where coaching would have the greatest impact.

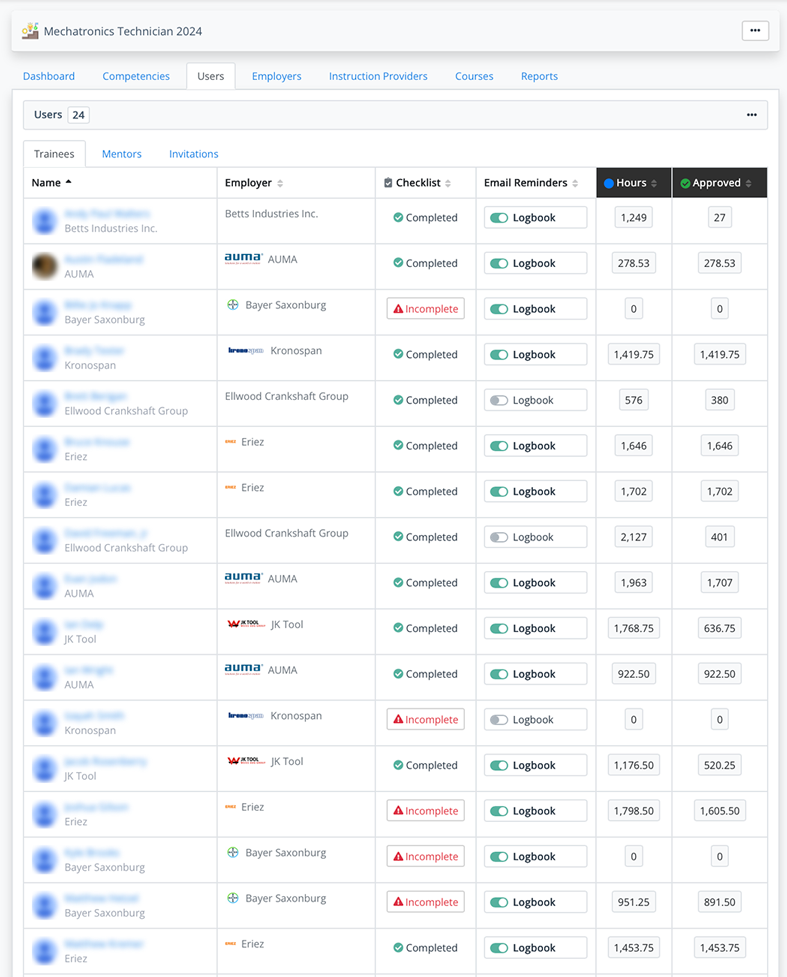

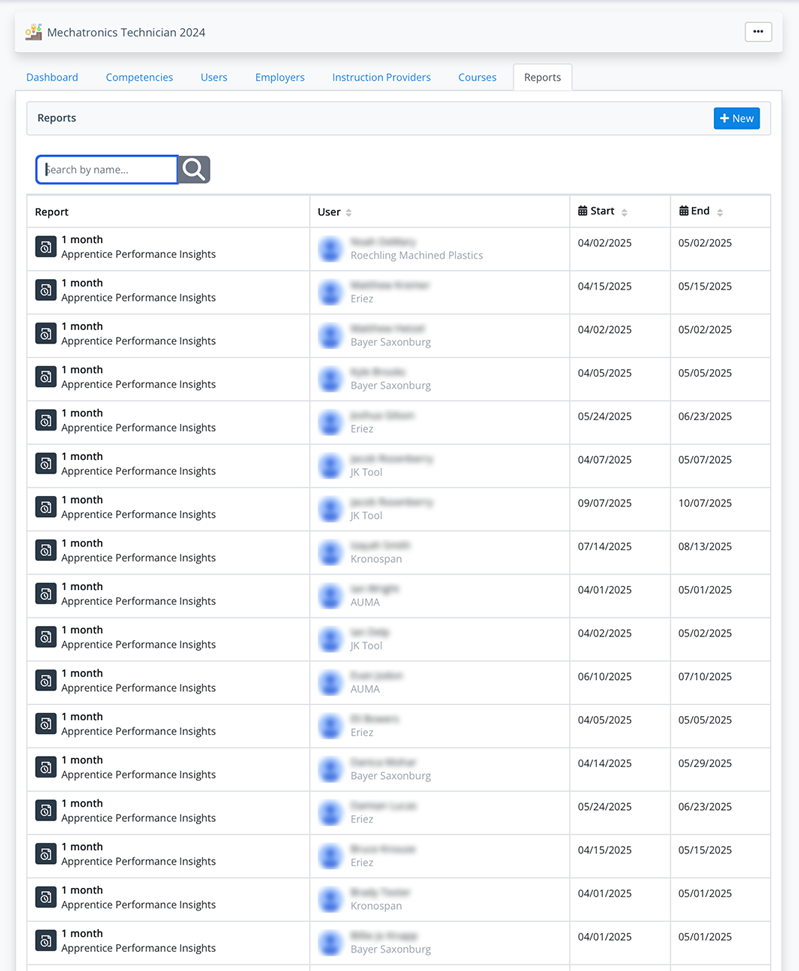

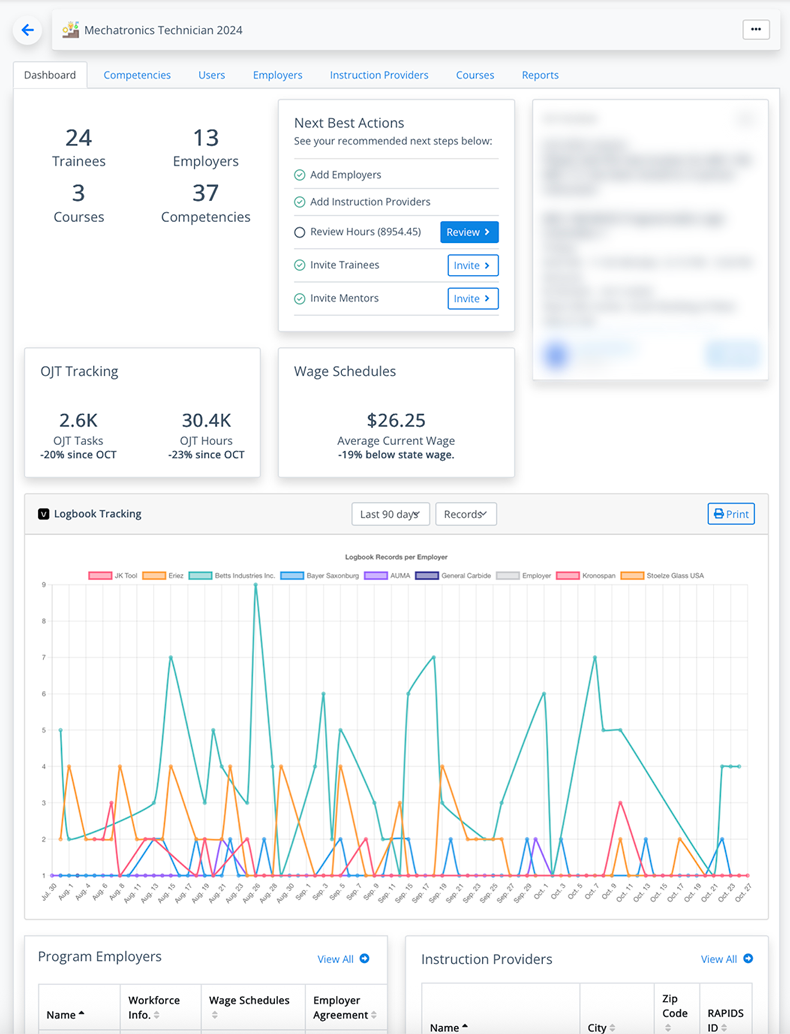

5. Portfolio oversight: validation → action

Program staff manage apprenticeships through dashboards that surface:

- Participation and engagement trends

- Review cadence and approval lag

- Documentation completeness

- Skill coverage and taxonomy consistency

This enables management by exception. Staff intervene where signals show drift—before compliance or performance issues compound.
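As a rough illustration of management by exception, the sketch below flags only the sites whose signals fall outside a tolerance band. The `SiteSignals` structure, the field names, and both thresholds are assumptions made for this example; the document does not state which thresholds GACC uses.

```python
from dataclasses import dataclass


@dataclass
class SiteSignals:
    site: str
    approval_rate: float             # share of records approved, 0..1
    median_approval_lag_days: float  # days from submission to approval


# Hypothetical thresholds, chosen only for this example.
MIN_APPROVAL_RATE = 0.80
MAX_LAG_DAYS = 7


def flag_exceptions(signals):
    """Return (site, reasons) pairs for sites that need attention."""
    flagged = []
    for s in signals:
        reasons = []
        if s.approval_rate < MIN_APPROVAL_RATE:
            reasons.append("low approval rate")
        if s.median_approval_lag_days > MAX_LAG_DAYS:
            reasons.append("slow review cadence")
        if reasons:
            flagged.append((s.site, reasons))
    return flagged
```

Sites that stay within tolerance never appear in the output, which is the point: staff attention goes only where the signals show drift.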

What observability looks like in practice (2023 → Dec 2025)

GACC's cumulative data shows observability operating at scale:

| Metric | Value |
|---|---|
| OJT records captured | 18,984 |
| Training hours documented | 195,243 |
| Active apprentices | 143 |
| Avg hours per apprentice | 1,365 |
| Transcript coverage | 100% |
| Competency tagging | 99.9% |
| Unique competencies tracked | 250 |
| Avg competencies per record | 3.57 |
| Supervisor approval rate | 88.75% |

Sustained engagement over time:

- 88.8% active 2+ months

- 55.2% active 6+ months

- 32.2% active 12+ months (up to 24 months)

These metrics represent true observability signals: volume, completeness, verification, and persistence—not just end-state totals.

Transcript intelligence deep dive (GACC-only, Dec 2025)

This is what “observability” looks like at the language layer—where audit readiness is either won or lost.

Evidence quality distribution

Across 16,850 transcripts:

- Median semantic quality score: 9.2

- 75th percentile: 23.3

- 95th percentile: 42.5

- 99th percentile: 63.0

Quality improves over time: average monthly score rose from 12.3 (2024-11) to 19.7 (2025-10).

What low-quality transcripts are missing (coaching diagnostics)

Most low-scoring entries aren’t “wrong”—they’re simply missing specific, detectable signals:

| Missing signal (low score < 40) | % of low-score transcripts |
|---|---|
| Units (psi / volts / rpm / etc.) | 99.8% |
| Outcome language (fixed / verified / resolved) | 94.5% |
| Action verbs (installed / replaced / tested) | 89.3% |
| Numbers / measurements | 75.3% |
| Domain theme keywords (maintenance / quality / safety) | 52.9% |

This aligns with the TQI model: coverage is necessary, but evidence strength requires actions + measurements + outcomes.

Operational insight: long shifts produce weaker narratives

Transcript quality drops as hours per record increase:

- 0–2 hours per record → avg score 20.9

- 8+ hours per record → avg score 12.2

This pattern is consistent with end-of-shift bulk logging: real work happened, but the narrative collapses into generic summaries.
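A comparison like this amounts to a grouped average. The sketch below shows one way to compute it, assuming each record is an `(hours, score)` pair. Only the 0–2 and 8+ buckets appear in the figures above; the middle bucket and the function names are assumptions.

```python
from collections import defaultdict


def bucket(hours: float) -> str:
    """Assign a record to an hours bucket (the middle bucket is assumed)."""
    if hours <= 2:
        return "0-2"
    if hours < 8:
        return "2-8"
    return "8+"


def avg_score_by_bucket(records):
    """Average quality score per hours bucket for (hours, score) pairs."""
    totals = defaultdict(lambda: [0.0, 0])  # bucket -> [score sum, count]
    for hours, score in records:
        entry = totals[bucket(hours)]
        entry[0] += score
        entry[1] += 1
    return {b: round(total / n, 1) for b, (total, n) in totals.items()}
```

Tracking this breakdown per employer or per cohort would show where end-of-shift bulk logging is most common.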

Detectable template patterns (early intervention)

Low-quality entries cluster around repeatable fragments (examples):

- worked in maintenance
- job change
- edm wire
- trouble shot
- 10 hrs

These phrases are useful because they can trigger real-time nudges before submission: “Add what you did, what you measured, and what changed.”
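A pre-submission check along these lines can be very small. The sketch below is one possible shape, not VELA's implementation: it fires only when the whole draft is one of the known fragments or an hours-only note. The fragment list and nudge text come from the examples above; the function name `nudge_for` and the hours pattern are assumptions.

```python
import re

# Fragments taken from the examples above.
TEMPLATE_FRAGMENTS = {
    "worked in maintenance",
    "job change",
    "edm wire",
    "trouble shot",
}
# Hours-only notes such as "10 hrs" (assumed pattern).
HOURS_ONLY = re.compile(r"^\d+(\.\d+)?\s*(hrs?|hours?)$")
NUDGE = "Add what you did, what you measured, and what changed."


def nudge_for(draft: str):
    """Return a coaching nudge if the draft is only a template fragment."""
    text = " ".join(draft.lower().split())  # normalize case and whitespace
    if text in TEMPLATE_FRAGMENTS or HOURS_ONLY.match(text):
        return NUDGE
    return None
```

Matching on the whole entry rather than on substrings is a deliberate choice here: an entry that starts with "job change" and then describes the work in detail should not be interrupted.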

Themes in the work (share of records with theme hits)

The transcript stream already contains reliable, domain-shaped signals:

- Production: 18.41%

- Maintenance: 15.55%

- Electrical: 10.53%

- Quality: 6.28%

- Documentation: 6.12%

- Training: 3.18%

- Safety: 0.85%

The takeaway: observability is not just volume—it’s the ability to detect what kind of work is happening and where evidence quality is drifting.
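Theme shares of this kind can be computed with simple keyword matching. The sketch below is an assumption-laden illustration: the theme names echo the list above, but the keyword sets and the function name `theme_share` are hypothetical, and the matching method actually used is not described in this document.

```python
import re

# Hypothetical keyword sets; the real theme lexicon is not published here.
THEME_KEYWORDS = {
    "maintenance": {"maintenance", "repair", "lubrication"},
    "electrical": {"wiring", "sensor", "voltage"},
    "safety": {"lockout", "ppe", "safety"},
}


def theme_share(transcripts):
    """Percent of transcripts with at least one keyword hit per theme."""
    counts = dict.fromkeys(THEME_KEYWORDS, 0)
    for t in transcripts:
        words = set(re.findall(r"[a-z]+", t.lower()))
        for theme, keywords in THEME_KEYWORDS.items():
            if words & keywords:
                counts[theme] += 1
    n = len(transcripts)
    return {theme: round(100 * c / n, 2) for theme, c in counts.items()}
```

Because one record can hit several themes and some records hit none, the shares are independent of one another and need not sum to 100%, which is consistent with the figures above.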

Why approval stays high (and why that’s good)

In the transcript-level slice, approvals remain effectively universal across quality tiers. That’s the intended separation:

- Approval confirms participation and supervisor touch.

- TQI measures evidence strength and guides targeted coaching.

This prevents supervisors from becoming a documentation bottleneck while still letting programs manage evidence quality by exception.

VELA data flow: from work activity to audit-ready evidence


- Timestamped entry

- Hours worked

- Natural-language transcript

- Transcript normalized

- Hours validated

- Linked to program, cohort, employer

- Competency tags

- Task → skill mapping

- Time + competency alignment

- Review transcript

- Approve / reject

- Initial + timestamp

- Immutable event

- Transcript + competencies

- Mentor-approved

- Tamper-evident hash

- Apprentice progress

- Employer engagement

- Risk & intervention signals

- RAPIDS exports

- Audit artifacts

- AI-generated narratives
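The "tamper-evident hash" item in this flow is not specified further. One common way to get tamper evidence is a hash chain, where each record's hash also covers the hash of the record before it. The sketch below assumes that technique and uses SHA-256 from Python's standard library. The record fields and function names are hypothetical, and VELA's actual mechanism may differ.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash used before the first record


def record_hash(record: dict, prev_hash: str) -> str:
    """Hash a record together with the previous record's hash."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def build_chain(records):
    """Return one hash per record, each linked to its predecessor."""
    chain, prev = [], GENESIS
    for record in records:
        prev = record_hash(record, prev)
        chain.append(prev)
    return chain


def verify_chain(records, chain):
    """True only if no record has been altered, added, or removed."""
    return build_chain(records) == chain
```

With this design, editing the hours on an approved record changes its hash and every hash after it, so the alteration is detectable by anyone holding the original chain.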

Why this is observability, not reporting

Traditional reporting answers: What happened last quarter? GACC's system answers: What's happening now—and where are we drifting?

Because evidence is structured and validated at the point of entry:

- Documentation completeness is continuous and measurable

- Skill demand and utilization become visible through demonstrated competencies

- Governance is standardized at the language layer, with 100% of apprenticeship and competency queries mapped to canonical labels

This makes analytics consistent across employers, sites, and programs—without custom cleanup.
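Mapping free-text labels to canonical ones is, at its simplest, a normalized lookup. The sketch below illustrates the idea with a hypothetical alias table; the labels, the `canonicalize` and `mapped_share` names, and the normalization rules are assumptions rather than GACC's actual taxonomy.

```python
# Hypothetical alias table: normalized free-text variant -> canonical label.
ALIASES = {
    "plc troubleshooting": "PLC Troubleshooting",
    "plc trouble shooting": "PLC Troubleshooting",
    "mold changeover": "Mold Changeover",
    "mold change over": "Mold Changeover",
}


def canonicalize(label: str):
    """Return the canonical label, or None if the variant is unknown."""
    key = " ".join(label.lower().replace("-", " ").split())
    return ALIASES.get(key)


def mapped_share(labels):
    """Percent of labels that resolve to a canonical label."""
    mapped = sum(1 for label in labels if canonicalize(label) is not None)
    return round(100 * mapped / len(labels), 1)
```

A mapped share below 100% would point to labels that need to be added to the alias table or retired, which is the kind of taxonomy hygiene the governance process is meant to catch.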

Employer outcomes: observable return on training investment

With VELA, employers move from lagging indicators (hours logged) to leading indicators (skills demonstrated and validated):

- Earlier readiness signals: Identify when apprentices are becoming independently productive

- Targeted coaching: Intervene precisely where skills lag

- Reduced rework risk: Validate task execution before bad habits form

- Better workforce decisions: Promote, deploy, or retain based on demonstrated capability

Why this matters for employers

- Every hour is contextualized: Employers see what work was performed, not just time logged

- Skills are provable: Competencies are demonstrated repeatedly across real tasks

- Supervisors stay aligned: Validation happens in the flow of work

- Risk is reduced: Gaps surface early, before they impact operations

In a retention snapshot across participating companies, 76.39% of known outcomes reflect retention. More importantly, employers gain visibility into what skills are being demonstrated, how consistently apprentices are engaged, and where supervision and validation are strong—or lagging.

This turns apprenticeship from a black box into a transparent talent system.

Before and after: employer perspective

Before VELA: Limited visibility into training ROI

- Training time recorded without task-level detail

- Skills inferred from hours, not evidence

- Performance issues discovered late

- Promotion and deployment decisions based on incomplete data

Result: Apprenticeship value was assumed, not continuously verified.

With VELA: Observable workforce development

- Task-level visibility into daily work performed

- Competency progression tracked continuously

- Supervisor validation embedded in operations

- Workforce decisions grounded in real performance data

Result: Employers gain confidence that apprenticeship investment produces real, job-ready capability.

The discipline behind the system

The results are not accidental. GACC enforces operational discipline through the platform:

- No empty logs: every record includes narrative evidence

- Supervisor behavior is measurable and managed

- Taxonomy and language governance are explicit and continuously improved

The system shapes behavior by design.

Continuous improvement: quality trends over time

VELA doesn't just capture data—it improves documentation behavior. Across recent cohorts, transcript quality scores show a clear upward trend over time, demonstrating that:

- Apprentices learn how to document work more effectively

- Coaching prompts and feedback loops work

- Evidence quality improves without adding administrative burden

This is learning-by-doing, applied to documentation itself. VELA identifies:

- Entries that need improvement

- Apprentices who would benefit from light coaching

- Individuals whose documentation quality is improving fastest

The result: supportive, targeted coaching—not punitive oversight—while keeping the system apprentice-friendly.

Known risks and mitigation

Two risks are actively managed:

- Overly brief task-level transcripts, which can reduce evidentiary clarity

- Taxonomy noise, where unclear or redundant competency labels weaken analytics

Mitigation is operational, not theoretical: supervisors coach "what / why / outcome" logging, and taxonomy hygiene is treated as a first-class governance function.

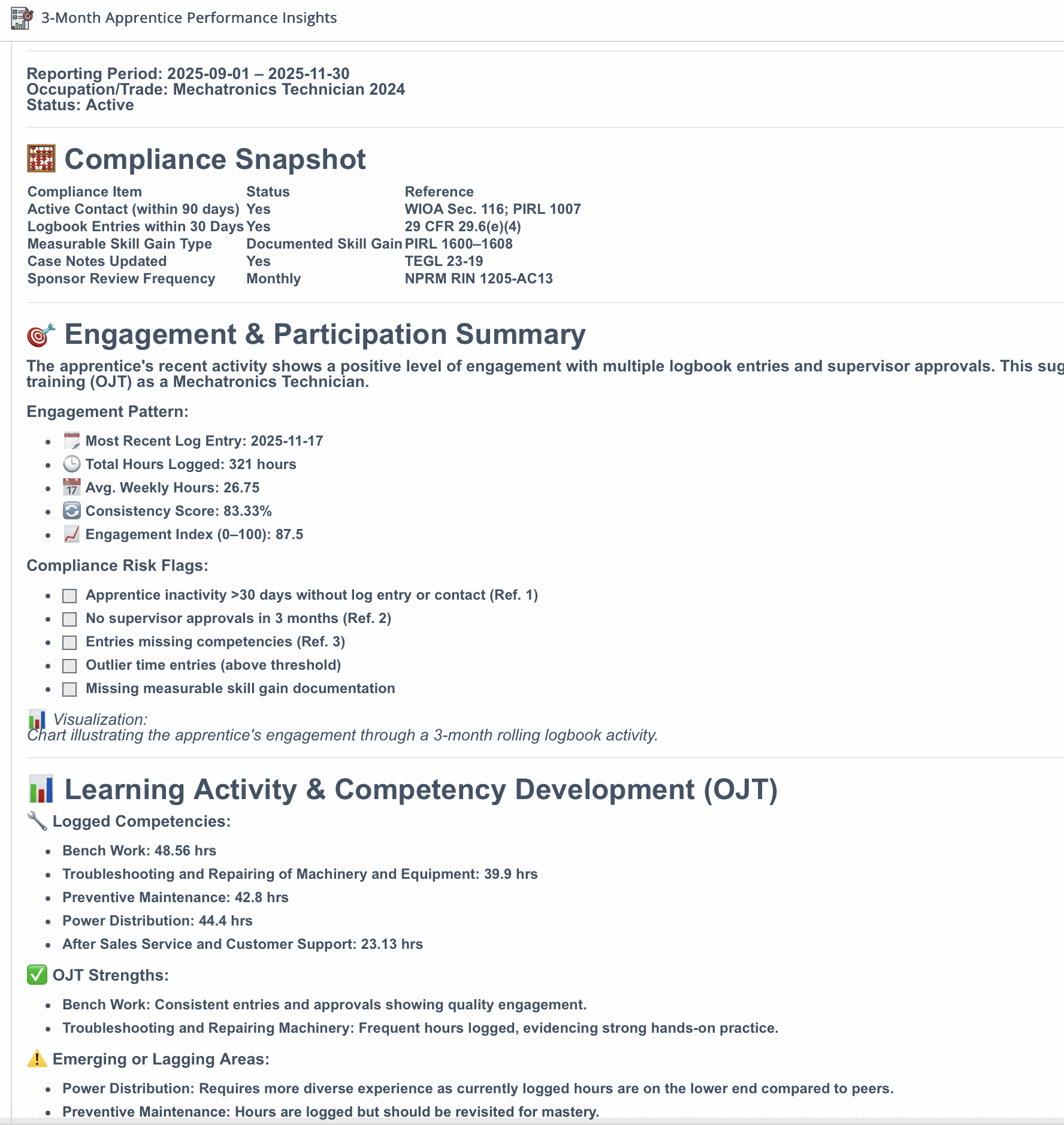

Program deep dive: Mechatronics and Polymer Technician apprenticeships

Below is a data-anchored description of the Mechatronics and Polymer Technician apprenticeships as they actually operate inside the GACC system, grounded in the observed OJT evidence, competency signals, and approval behavior.

Mechatronics Technician Apprenticeship

Program role in the GACC portfolio

Within GACC's apprenticeship portfolio, the Mechatronics Technician program represents the highest-complexity, highest-signal OJT environment. Apprentices operate across electrical, mechanical, fluid, and control systems in live production settings, generating dense, multi-competency evidence.

This is reflected directly in the data: mechatronics logbook entries frequently carry multiple competency tags per record, contributing materially to GACC's average of 3.57 competencies per OJT entry across the portfolio.

What the data shows apprentices actually do

GACC's logbook transcripts and competency mappings show repeated, validated work in:

- Electrical and power systems: Power distribution, electrical drops, sensor diagnostics, proximity devices, and electrical drawing interpretation

- Automation and controls: PLC troubleshooting, HMI interaction, VFD configuration, control sequencing, and fault isolation

- Mechanical and material handling systems: Gearboxes, conveyors, transfer machinery, power transmission, and drive systems

- Fluid and thermal systems: Pneumatics, hydraulics, pumps, chillers, lubrication systems, and temperature control

- Preventive and corrective maintenance: Vibration analysis, diagnostics, quality checks, and breakdown response under production constraints

These activities dominate the highest-frequency demonstrated competencies in the dataset, indicating where real production value is created.

Observability characteristics

- Mechatronics records show high transcript completeness (aligned with the portfolio's 100% transcript coverage)

- Supervisor approval rates track closely with the portfolio average (~88.75%), confirming that complex work is being reviewed and validated—not self-asserted

- Competency breadth is wide, with repeated demonstrations across months, supporting long-horizon skill accumulation rather than one-off task logging

- Role-specific language detection: VELA recognizes distinct technical vocabulary in mechatronics transcripts—PLC, VFD, gearbox, hydraulic, proximity sensor—ensuring logs reflect real maintenance and troubleshooting work, not generic checklists

In short: the GACC data shows mechatronics apprentices operating as production technicians, not trainees-in-name-only.

Polymer Technician Apprenticeship

Program role in the GACC portfolio

The Polymer Technician program produces a different but equally critical signal profile. Where mechatronics emphasizes system integration and troubleshooting, polymer apprenticeships emphasize process stability, material behavior, and quality control.

This shows up in the data as high-frequency, repeatable task evidence tied to material handling and process tuning rather than mechanical breakdowns.

What the data shows apprentices actually do

Logbook transcripts and competency mappings consistently reference:

- Polymer processing operations: Injection molding, extrusion, thermoforming, and machine operation during live production

- Material handling and preparation: Resin identification, drying systems, material blending, and contamination prevention

- Process control and optimization: Monitoring and adjusting temperatures, pressures, flow rates, and cycle times

- Tooling and setup: Mold changes, changeovers, auxiliary equipment setup, and start-up stabilization

- Quality assurance and defect resolution: Identifying warping, sink marks, short shots, surface defects, and implementing corrective actions

- Preventive maintenance: Cleaning, heater bands, chillers, vacuum systems, and routine machine upkeep

These activities correlate with stable, sustained logging patterns, contributing to GACC's strong multi-month engagement rates.

Observability characteristics

- Polymer technician records show repeat competency demonstrations over time, indicating process mastery rather than episodic exposure

- Supervisor approvals validate not just task completion, but process correctness and quality outcomes

- The structured evidence makes it possible to link material decisions directly to quality results—something paper logbooks cannot support

- Role-specific language detection: VELA identifies polymer-specific terminology—extrusion, injection molding, resin, thermoforming, mold changeover—making transcripts meaningful to both employers and industry reviewers

The data reflects technicians who are operating processes to spec, not merely assisting.

How these programs shape GACC's observability profile

Across 143 apprentices, 18,984 OJT records, and 250 unique competencies, Mechatronics and Polymer Technician apprenticeships account for the majority of high-value operational evidence in the GACC system.

Together, they demonstrate:

- Why 3.57 competencies per record is achievable at scale

- How 100% transcript coverage remains feasible even in complex environments

- Why supervisor validation can remain high without bottlenecking production

- How apprenticeship data becomes actionable when tied to real production systems

The GACC data shows that Mechatronics and Polymer Technician apprenticeships are not abstract training programs. They are instrumented production roles, generating validated, observable evidence of skill development inside live manufacturing environments.

That is precisely why GACC can manage these programs at scale: the work itself is observable, governable, and defensible—by design.

Why VELA matters: value by stakeholder

For employers

- Clear visibility into what apprentices actually did—not vague summaries

- Stronger evidence for productivity, safety, and skill progression

- Reduced rework, ambiguity, and compliance risk at audit time

For sponsors & intermediaries

- Audit-ready documentation at scale without manual cleanup

- Less pre-reporting scramble—evidence is structured continuously

- Defensible narratives aligned to competencies and standards

For states & evaluators

- High transcript completeness across the entire portfolio

- Transparent quality signals that distinguish strong from weak evidence

- Consistent, reviewable evidence across programs and employers

The bottom line

GACC achieved real-time observability in advanced manufacturing apprenticeships by converting OJT from retrospective paperwork into a live, validated evidence stream:

- Capture at the edge

- Structure the evidence

- Verify continuously

- Govern by exception

The result is portfolio-scale apprenticeship governance with measurable completeness, verification, and engagement—supporting compliance readiness, employer confidence, and operational control without increasing administrative overhead.

VELA replaces vague, rushed, end-of-period logbooks with real-time, evidence-grade documentation—captured naturally, validated easily, and continuously improved.

It doesn't just record training. It proves it.

Key takeaway: VELA gives employers real-time observability into how training investment turns into productive, skilled workers.

Executive summary: VELA makes apprenticeship ROI visible—turning daily work into verified skill development and measurable workforce readiness.